| Kinect v1 | Kinect v2 | ||

|---|---|---|---|

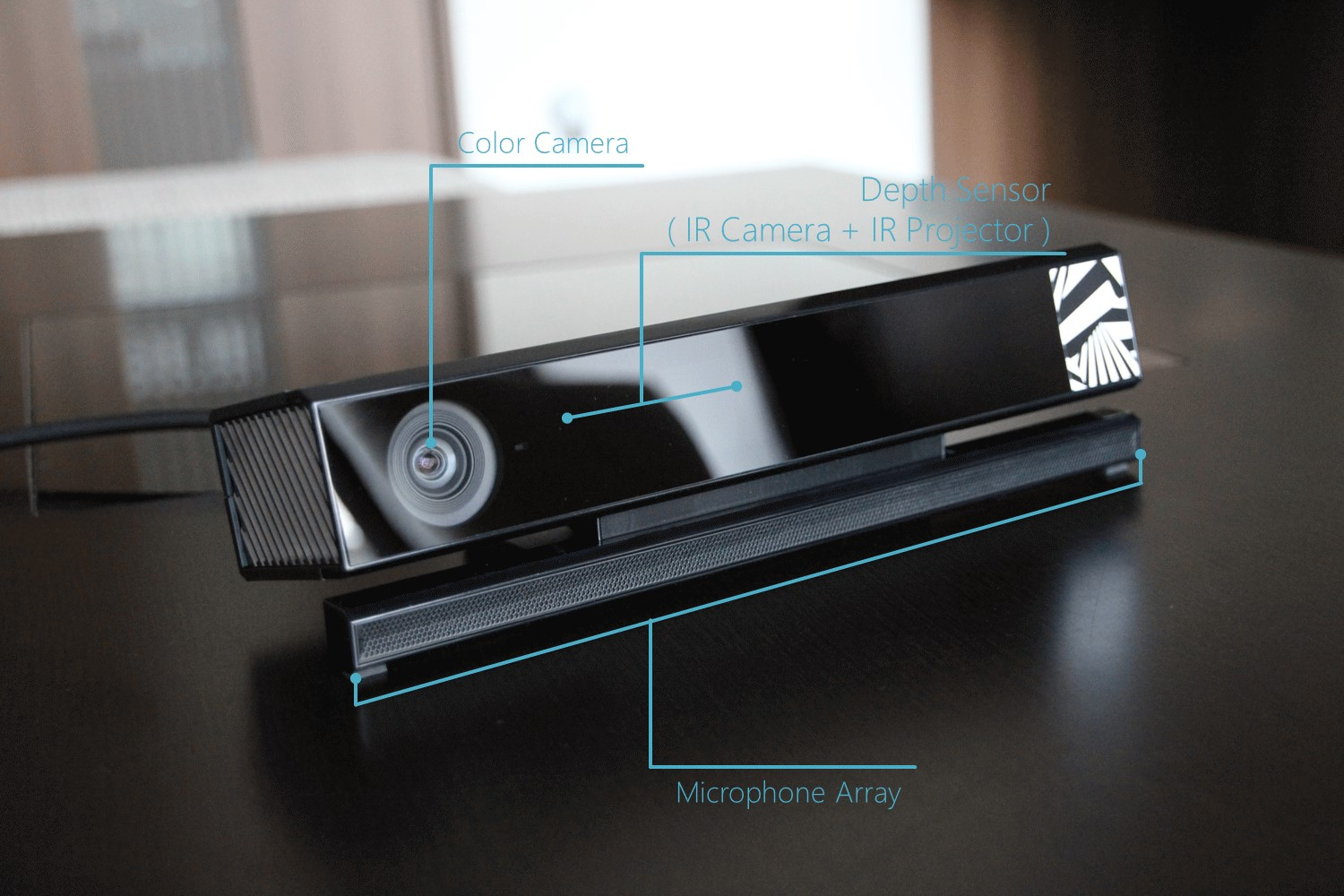

| 颜色(Color) | 分辨率(Resolution) | 640×480 | 1920×1080 |

| fps | 30fps | 30fps | |

| 深度(Depth) | 分辨率(Resolution) | 320×240 | 512×424 |

| fps | 30fps | 30fps | |

| 人物数量(Player) | 6人 | 6人 | |

| 人物姿势(Skeleton) | 2人 | 6人 | |

| 関節(Joint) | 20関節/人 | 25関節/人 | |

| 手的開閉状態(Hand State) | △(Developer Toolkit) | ○(SDK) | |

| 检测範囲(Range of Detection) | 0.8~4.0m | 0.5~4.5m | |

| 角度(Angle)(Depth) | 水平(Horizontal) | 57度 | 70度 |

| 垂直(Vertical) | 43度 | 60度 | |

| (Tilt Motor) | ○ | ×(手動) | |

| 複数的App | ×(単一的App) | ○ | |

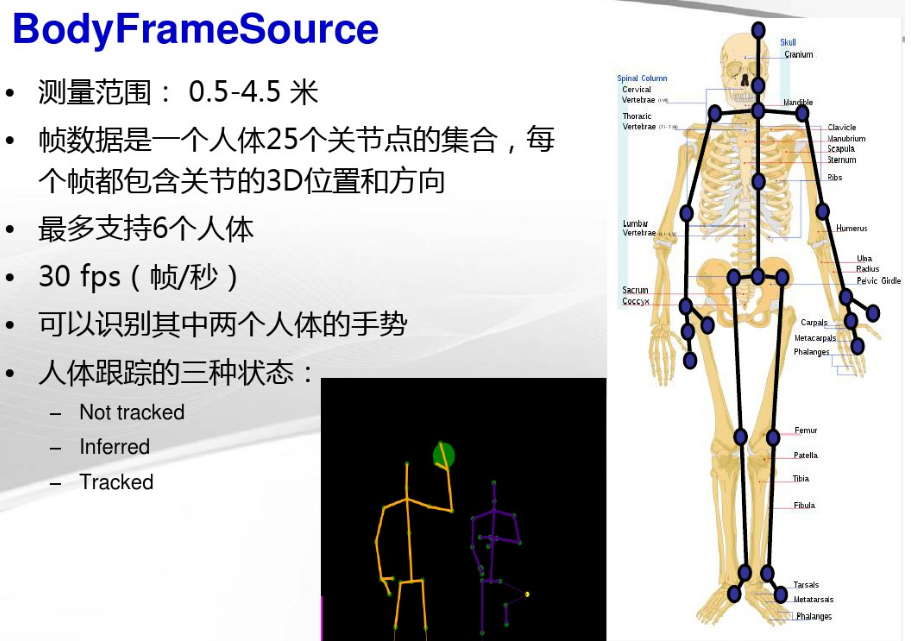

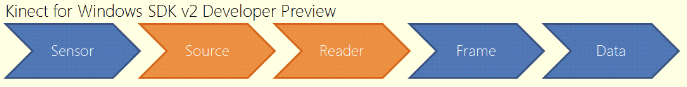

Kinect2.0的数据获取流程如下图所示:

参考Kinect for Windows v2.0 SDK中的Body Basics-D2D(C++ Direct2D sample)示例程序,可以自己构建一个基本的骨骼追踪程序。

被”骨骼跟踪”的用户位置由摄像机坐标系下的X、Y、Z坐标表示,不同于彩色图像空间坐标系,该坐标系是三维的,以米为单位。Z轴表示红外摄像头光轴,与图像平面垂直。Kinect传感器的位置会影响骨骼坐标。如果Kinect被放置在非水平面上或者有可能通过传动马达调整有效视角范围。在这种情况下,Y轴就不是垂直于水平面的,或者说与重力方向不平行,那么计算得到的坐标系可能并非是标准形式。因此,在最终的图像中,尽管人笔直地站立,但却显示为倾斜的。

为了在屏幕上显示关节点,需要进行Kinect空间坐标转换,下面代码将摄像机空间坐标转换为深度图像坐标:

CameraSpacePoint cameraSpacePoint = { x, y, z }; // 获取到的关节坐标

m_pCoordinateMapper -> MapCameraPointToDepthSpace(cameraSpacePoint, &depthSpacePosition[i]); // 转换到深度图像坐标系

- Camera space

Camera space refers to the 3D coordinate system used by Kinect. The coordinate system is defined as follows:

- The origin (x=0, y=0, z=0) is located at the center of the IR sensor on Kinect

- X grows to the sensor’s left

- Y grows up (note that this direction is based on the sensor’s tilt)

- Z grows out in the direction the sensor is facing

- 1 unit = 1 meter

[The camera space coordinate system]

Any Kinect Tracking algorithm that operates in 3D (e.g. Skeletal Tracking) stores its results in camera space. In some cases, you might want to project one of these points to a row/column location on the depth image for further processing. In that case, you would be mapping from camera space to depth space.

- Depth space

Depth space is the term used to describe a 2D location on the depth image. Think of this as a row/column location of a pixel where x is the column and y is the row. So x=0, y=0 corresponds to the top left corner of the image and x=511, y=423 (width-1, height-1) is the bottom right corner of the image. In some cases, a z value is needed to map out of depth space. For these cases, simply sample the depth image at the row/column in question, and use that value (which is depth in millimeters) directly as z.

- Color space

The color sensor on Kinect is offset a bit from the sensor that generates depth and infrared. As a result, the depth sensor and the color sensor see a slightly different view of the world. If you want to find the color that corresponds to given pixel on the depth image, you’ll have to convert its position to color space. To color space describes a 2D point on the color image, just like depth space does for the depth image. So a position in color space is a row/column location of a pixel on the image, where x=0, y=0 is the pixel at the top left of the color image, and x=1919, y=1079 (width-1, height-1) corresponds to the bottom right.

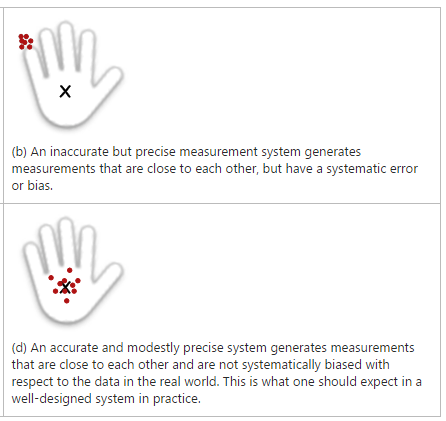

与任何测量系统一样,Kinect获取到的关节坐标数据包含了许多噪声。影响噪声特性和大小的因素有很多(room lighting; a person’s body size; the person’s distance from the sensor array; the person’s pose (for example, for hand data, if the person’s hand is open or fisted); location of the sensor array; quantization noise; rounding effects introduced by computations; and so on)。误差从来源看可分为系统误差和偶然误差,如下图b所示为系统误差(由于仪器本身不精确,或实验方法粗略,或实验原理不完善而产生的),要减小系统误差,必须校准测量仪器,改进实验方法,设计在原理上更为完善的实验。图d为偶然误差(由各种偶然因素而产生的),偶然误差总是有时偏大,有时偏小,并且偏大偏小的概率相同。因此,可以多进行几次测量,求出几次测得的数值的平均值,这个平均值比一次测得的数值更接近于真实值。由于噪声的性质各不相同,适用的滤波方法也不一样。因此,Kinect开发者需要结合多种滤波方法来获得满意的效果。

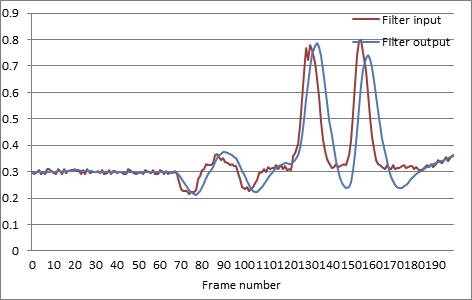

滤波会带来一定的延迟,The joint filtering latency is how much time it takes for filter output to catch up to the actual joint position when there is a movement in a joint. User research shows that 72% of people start noticing this delay when latency is more than 100 msec, and therefore, it is suggested that developers aim for an overall latency of 100 msec

[latency added by joint filtering is the lag between output and input when there is movement in input data]

A useful technique to reduce latency is to tweak the joint filter to predict the future joint positions. That is, the filter output would be a smoothed estimate of joint position in subsequent frames. If forecasting is used, then joint filtering would reduce the overall latency. However, since the forecasted outputs are estimated from previous data, the forecasted data may not always be accurate, especially when a movement is suddenly started or stopped. Forecasting may propagate and magnify the noise in previous data to future data, and hence, may increase the noise.

One should understand how latency and smoothness affect the user experience, and identify which one is more important to create a good experience. Then, carefully choose a filtering method and fine-tune its parameters to match the specific needs of the application. In most Kinect applications, data output from the ST system is used for a variety of purposes. Joints have different characteristics from one another in terms of how fast they can move, or how they are used to create the overall experience. For example, in some applications, a person’s hands can move much faster than the spine joint, and therefore, one needs to use different filtering techniques for hands than the spine and other joints. So, there is no filtering solution that fits the needs of all use cases for all joints.

The joint filter implementation in a typical application receives joint positions from ST as input in each frame, and returns the filtered joint positions as output. The filter treats each joint’s x, y, and z coordinates independently from other joints or other dimensions. That is, a filter is independently applied to the x, y, and z coordinate of each joint separately—and potentially each with different filtering parameters. Note that, though it is typical to directly filter the Cartesian position data returned by ST, one can apply the same filtering techniques to any data calculated from joint positions.

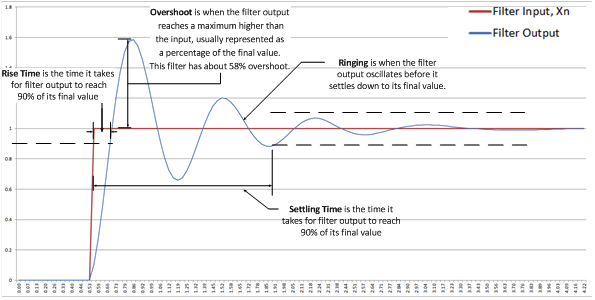

为了能更好地理解各种滤波方法和设置滤波参数,我们可以研究滤波器的阶跃信号和正弦信号的时间响应:

[Typical response of a filter to step function input]

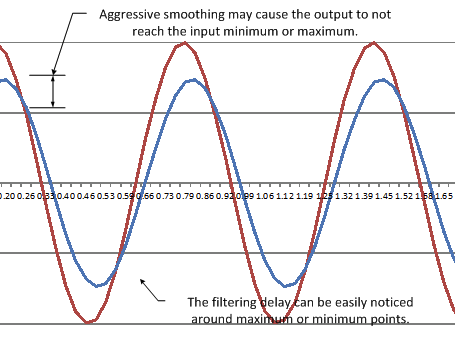

Rise time shows how quickly the filter catches up with sudden changes in input, while overshoot, ringing, and settling time are indications of how well a filter can settle down after it has responded to a sudden change in input. A filter’s response to a step function does not reveal all of the useful characteristics of a filtering technique, because it only shows the filter’s response to sudden changes. It is also useful to study a filter’s response to sine waveform input, both in time and frequency domains.

[Typical response of a filter to sine waveform input]

骨骼数据滤波方法有多种:

- ARMA模型(auto regressive moving average model)自回归滑动平均模型

-

一次移动平均平滑

-

二次移动平均平滑

-

一次指数平滑

-

二次指数平滑

-

Adaptive Double Exponential Smoothing Filter

-

中值滤波(Median Filter)

-

Taylor Series Filter

- Savitzky-Golay 滤波器

-

Jitter Removal Filter

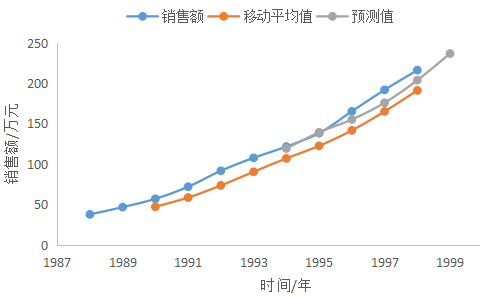

最简单的就是一次移动平均滤波,但一次移动平滑法只适用于水平型历史数据的预测,而对有明显趋势的数据会产生较大的系统误差。例如,某零售企业食品部1988-1998年的销售额资料如下表所示:

首先根据已知资料绘制散点图。由图中可看到销售额逐年增加,若用简单的一次移动平均法预测,就会出现预测值与实际值的滞后现象:

可以采用趋势修正移动平均法进行预测来消除滞后,趋势修正移动平均法(二次移动平均法)是指在简单移动平均法或加权移动平均法的基础上,计算变动趋势值,并对变动趋势值进行移动平均,求出若干期的变动趋势平均值,再利用此趋势平均值修正简单移动平均或加权移动平均预测值,以消除原预测值的滞后影响的一种计算方法。

变动趋势的计算公式为:$b_t=S_t-S_{t-1}$

式中:$b_t$--第t期的变动趋势值;$S_t$--第t期的移动平均值(平滑值);$S_{t-1}$--第t-1期的移动平均值。

利用变动趋势值进行预测时,可按下述模型:

$$F_{t+T}=S_t+T \cdot \bar{b_t}$$

式中:$F_{t+T}$--预测值;T--间隔期数;$\bar{b_t}$--平均变动趋势值。

选择N=3进行移动平均,计算出移动平均值,趋势值以及平均趋势值。根据表中的数据,要预测1999年的销售额,计算公式为:

$$F_{t+T}=S_t+T \cdot \bar{b_t}=191.3+2 \times 22.833=236.967$$

由此可看出,对于总体有上升或下降趋势的时间序列,由于采用了变动趋势修正移动平均法进行预测,消除了滞后或超前的偏差,能够较真实地反映出事物发展的规律。

在时间序列数据呈现线性趋势时,移动平均值总是落后于观察值数据的变化。二次移动平均法,正是要纠正这一滞后偏差,建立预测目标的线性时间关系数学模型,求得预测值。二次移动平均预测法解决了预测值滞后于实际观察值的矛盾,适用于有明显趋势变动的市场现象时间序列的预测, 同时它还保留了一次移动平均法的优点。

二次指数平滑法的基本思想与二次移动平均法一致,Kinect for Windows 1.5, 1.6, 1.7, 1.8 SDK中关节数据平滑函数NuiTransformSmooth就是利用了Holt双参数线性指数平滑法。Kinect SDK2.0中不再包含现成的平滑方法,需要自己去实现,可以参考How to use Joint Smoothing和Skeletal Joint Smoothing White Paper

Parameters

TRANSFORM_SMOOTH_PARAMETERS

Correction:修正参数,值越小,修正越多;fSmoothing:平滑参数,设置处理骨骼数据帧时的平滑量。值越大,平滑的越多,0表示不进行平滑;

JitterRadius:抖动半径参数,设置修正的半径。如果关节点“抖动”超过了设置的这个半径,将会被纠正到这个半径之内。(Jitter removal limits changes of each frame in order to dampen the spikes);

Prediction: 预测超前期数,增大该值能减小滤波的延迟,但是会对突然的运动更敏感,容易造成过冲(可以设置合理的最大偏离半径减轻该问题);

MaxDeviationRadius:最大偏离半径参数,用来和抖动半径一起来设置抖动半径的最大边界。

使用霍尔特双参数指数平滑法来平滑关节数据减小抖动,可以参考下面的代码(Jitter filter和初始化那几行没看懂,好像根上面的描述有冲突,代码中把与上次平滑值偏差较大超过JitterRadius的点当作有效点)

KinectJointFilter.h

//-------------------------------------------------------------------------------------- // KinectJointFilter.h // // This file contains Holt Double Exponential Smoothing filter for filtering Joints // // Copyright (C) Microsoft Corporation. All rights reserved. //-------------------------------------------------------------------------------------- #pragma once #include <Windows.h> #include <Kinect.h> #include <DirectXMath.h> #include <queue> typedef struct _TRANSFORM_SMOOTH_PARAMETERS { FLOAT fSmoothing; // [0..1], lower values closer to raw data FLOAT fCorrection; // [0..1], lower values slower to correct towards the raw data FLOAT fPrediction; // [0..n], the number of frames to predict into the future FLOAT fJitterRadius; // The radius in meters for jitter reduction FLOAT fMaxDeviationRadius; // The maximum radius in meters that filtered positions are allowed to deviate from raw data } TRANSFORM_SMOOTH_PARAMETERS; // Holt Double Exponential Smoothing filter class FilterDoubleExponentialData { public: DirectX::XMVECTOR m_vRawPosition; DirectX::XMVECTOR m_vFilteredPosition; DirectX::XMVECTOR m_vTrend; DWORD m_dwFrameCount; }; class FilterDoubleExponential { public: FilterDoubleExponential() { Init(); } ~FilterDoubleExponential() { Shutdown(); } void Init( FLOAT fSmoothing = 0.25f, FLOAT fCorrection = 0.25f, FLOAT fPrediction = 0.25f, FLOAT fJitterRadius = 0.03f, FLOAT fMaxDeviationRadius = 0.05f ) { Reset( fSmoothing, fCorrection, fPrediction, fJitterRadius, fMaxDeviationRadius ); } void Shutdown() { } // Reset the filter when a skeleton is lost void Reset( FLOAT fSmoothing = 0.25f, FLOAT fCorrection = 0.25f, FLOAT fPrediction = 0.25f, FLOAT fJitterRadius = 0.03f, FLOAT fMaxDeviationRadius = 0.05f ) { assert( m_pFilteredJoints ); assert( m_pHistory ); m_fMaxDeviationRadius = fMaxDeviationRadius; // Size of the max prediction radius Can snap back to noisy data when too high m_fSmoothing = fSmoothing; // How much smothing will occur. Will lag when too high m_fCorrection = fCorrection; // How much to correct back from prediction. Can make things springy m_fPrediction = fPrediction; // Amount of prediction into the future to use. Can over shoot when too high m_fJitterRadius = fJitterRadius; // Size of the radius where jitter is removed. Can do too much smoothing when too high memset( m_pFilteredJoints, 0, sizeof( DirectX::XMVECTOR ) * JointType_Count ); memset( m_pHistory, 0, sizeof( FilterDoubleExponentialData ) * JointType_Count ); } void Update( IBody* const pBody ); void Update( Joint joints[] ); inline const DirectX::XMVECTOR* GetFilteredJoints() const { return &m_pFilteredJoints[0]; } private: DirectX::XMVECTOR m_pFilteredJoints[JointType_Count]; FilterDoubleExponentialData m_pHistory[JointType_Count]; FLOAT m_fSmoothing; FLOAT m_fCorrection; FLOAT m_fPrediction; FLOAT m_fJitterRadius; FLOAT m_fMaxDeviationRadius; void Update( Joint joints[], UINT JointID, TRANSFORM_SMOOTH_PARAMETERS smoothingParams ); };

KinectJointFilter.cpp

//-------------------------------------------------------------------------------------- // KinectJointFilter.cpp // // This file contains Holt Double Exponential Smoothing filter for filtering Joints // // Copyright (C) Microsoft Corporation. All rights reserved. //-------------------------------------------------------------------------------------- //#include "stdafx.h" #include "KinectJointFilter.h" using namespace DirectX; //------------------------------------------------------------------------------------- // Name: Lerp() // Desc: Linear interpolation between two floats //------------------------------------------------------------------------------------- inline FLOAT Lerp( FLOAT f1, FLOAT f2, FLOAT fBlend ) { return f1 + ( f2 - f1 ) * fBlend; } //-------------------------------------------------------------------------------------- // if joint is 0 it is not valid. //-------------------------------------------------------------------------------------- inline BOOL JointPositionIsValid( XMVECTOR vJointPosition ) { return ( XMVectorGetX( vJointPosition ) != 0.0f || XMVectorGetY( vJointPosition ) != 0.0f || XMVectorGetZ( vJointPosition ) != 0.0f ); } //-------------------------------------------------------------------------------------- // Implementation of a Holt Double Exponential Smoothing filter. The double exponential // smooths the curve and predicts. There is also noise jitter removal. And maximum // prediction bounds. The paramaters are commented in the init function. //-------------------------------------------------------------------------------------- void FilterDoubleExponential::Update( IBody* const pBody ) { assert( pBody ); // Check for divide by zero. Use an epsilon of a 10th of a millimeter m_fJitterRadius = XMMax( 0.0001f, m_fJitterRadius ); //Returns the larger of the two input objects TRANSFORM_SMOOTH_PARAMETERS SmoothingParams; Joint joints[JointType_Count]; pBody->GetJoints( _countof(joints), joints ); for( INT i = 0; i < JointType_Count; i++ ) { SmoothingParams.fSmoothing = m_fSmoothing; SmoothingParams.fCorrection = m_fCorrection; SmoothingParams.fPrediction = m_fPrediction; SmoothingParams.fJitterRadius = m_fJitterRadius; SmoothingParams.fMaxDeviationRadius = m_fMaxDeviationRadius; // If inferred, we smooth a bit more by using a bigger jitter radius Joint joint = joints[i]; if( joint.TrackingState == TrackingState::TrackingState_Inferred ) { SmoothingParams.fJitterRadius *= 2.0f; SmoothingParams.fMaxDeviationRadius *= 2.0f; } Update( joints, i, SmoothingParams ); } } void FilterDoubleExponential::Update( Joint joints[] ) { // Check for divide by zero. Use an epsilon of a 10th of a millimeter m_fJitterRadius = XMMax( 0.0001f, m_fJitterRadius ); TRANSFORM_SMOOTH_PARAMETERS SmoothingParams; for( INT i = 0; i < JointType_Count; i++ ) { SmoothingParams.fSmoothing = m_fSmoothing; SmoothingParams.fCorrection = m_fCorrection; SmoothingParams.fPrediction = m_fPrediction; SmoothingParams.fJitterRadius = m_fJitterRadius; SmoothingParams.fMaxDeviationRadius = m_fMaxDeviationRadius; // If inferred, we smooth a bit more by using a bigger jitter radius Joint joint = joints[i]; if( joint.TrackingState == TrackingState::TrackingState_Inferred ) { SmoothingParams.fJitterRadius *= 2.0f; SmoothingParams.fMaxDeviationRadius *= 2.0f; } Update( joints, i, SmoothingParams ); // 对每个关节数据分别进行平滑滤波 } } void FilterDoubleExponential::Update( Joint joints[], UINT JointID, TRANSFORM_SMOOTH_PARAMETERS smoothingParams ) { XMVECTOR vPrevRawPosition; // x(t-1) XMVECTOR vPrevFilteredPosition; // 前一期平滑值S(t-1) XMVECTOR vPrevTrend; // 前一期趋势值b(t-1) XMVECTOR vRawPosition; // 实际值x(t) XMVECTOR vFilteredPosition; // 平滑值S(t) XMVECTOR vPredictedPosition; // 预测值F(t+T) XMVECTOR vTrend; // 趋势值b(t) XMVECTOR vDiff; XMVECTOR vLength; FLOAT fDiff; BOOL bJointIsValid; const Joint joint = joints[JointID]; vRawPosition = XMVectorSet( joint.Position.X, joint.Position.Y, joint.Position.Z, 0.0f ); vPrevFilteredPosition = m_pHistory[JointID].m_vFilteredPosition; // 前一期平滑值S(t-1) vPrevTrend = m_pHistory[JointID].m_vTrend; // 前一期趋势值b(t-1) vPrevRawPosition = m_pHistory[JointID].m_vRawPosition; // x(t-1) bJointIsValid = JointPositionIsValid( vRawPosition ); // If joint is invalid, reset the filter if( !bJointIsValid ) { m_pHistory[JointID].m_dwFrameCount = 0; } // Initial start values if( m_pHistory[JointID].m_dwFrameCount == 0 ) { vFilteredPosition = vRawPosition; vTrend = XMVectorZero(); m_pHistory[JointID].m_dwFrameCount++; } else if( m_pHistory[JointID].m_dwFrameCount == 1 ) { vFilteredPosition = XMVectorScale( XMVectorAdd( vRawPosition, vPrevRawPosition ), 0.5f ); //XMVectorScale: Scalar multiplies a vector by a floating-point value vDiff = XMVectorSubtract( vFilteredPosition, vPrevFilteredPosition ); vTrend = XMVectorAdd( XMVectorScale( vDiff, smoothingParams.fCorrection ), XMVectorScale( vPrevTrend, 1.0f - smoothingParams.fCorrection ) ); m_pHistory[JointID].m_dwFrameCount++; } else { // A good filtering solution is usually a combination of various filtering techniques, which may include applying // a jitter removal filter to remove spike noise, a smoothing filter, and a forecasting filter to reduce latency, // and then adjusting the outputs based on person kinematics and anatomy to avoid awkward cases caused by overshoot. // First apply jitter filter vDiff = XMVectorSubtract( vRawPosition, vPrevFilteredPosition ); vLength = XMVector3Length( vDiff ); //Returns a vector. The length of vDiff is replicated into each component fDiff = fabs( XMVectorGetX( vLength ) ); if( fDiff <= smoothingParams.fJitterRadius ) { vFilteredPosition = XMVectorAdd( XMVectorScale( vRawPosition, fDiff / smoothingParams.fJitterRadius ), XMVectorScale( vPrevFilteredPosition, 1.0f - fDiff / smoothingParams.fJitterRadius ) ); } else // It should be determined not to be a jitter when the diff value is exceed radius threshold of parameter. In this case, It adopt raw value. { vFilteredPosition = vRawPosition; } //// Now the double exponential smoothing filter: // 1. S(t) = α*x(t) + (1-α)*(S(t-1)+b(t-1)) 0≤α≤1 // The first smoothing equation adjusts St. This helps to eliminate the lag and brings St to the appropriate base of the current value. vFilteredPosition = XMVectorAdd( XMVectorScale( vFilteredPosition, 1.0f - smoothingParams.fSmoothing ), XMVectorScale( XMVectorAdd( vPrevFilteredPosition, vPrevTrend ), smoothingParams.fSmoothing ) ); vDiff = XMVectorSubtract( vFilteredPosition, vPrevFilteredPosition ); // S(t)-S(t-1) // 2. b(t)= γ * (S(t)-S(t-1)) + (1-γ) * b(t-1) 0≤γ≤1 // The second smoothing equation then updates the trend, which is expressed as the difference between the last two values. vTrend = XMVectorAdd( XMVectorScale( vDiff, smoothingParams.fCorrection ), XMVectorScale( vPrevTrend, 1.0f - smoothingParams.fCorrection ) ); // 修正趋势值 } // 3. F(t+T) = S(t) + b(t)*T vPredictedPosition = XMVectorAdd( vFilteredPosition, XMVectorScale( vTrend, smoothingParams.fPrediction ) ); // Predict into the future to reduce latency // Check that we are not too far away from raw data vDiff = XMVectorSubtract( vPredictedPosition, vRawPosition ); vLength = XMVector3Length( vDiff ); fDiff = fabs( XMVectorGetX( vLength ) ); if( fDiff > smoothingParams.fMaxDeviationRadius ) { vPredictedPosition = XMVectorAdd( XMVectorScale( vPredictedPosition, smoothingParams.fMaxDeviationRadius / fDiff ), XMVectorScale( vRawPosition, 1.0f - smoothingParams.fMaxDeviationRadius / fDiff ) ); } // Save the data from this frame m_pHistory[JointID].m_vRawPosition = vRawPosition; m_pHistory[JointID].m_vFilteredPosition = vFilteredPosition; m_pHistory[JointID].m_vTrend = vTrend; // Output the data m_pFilteredJoints[JointID] = vPredictedPosition; m_pFilteredJoints[JointID] = XMVectorSetW( m_pFilteredJoints[JointID], 1.0f ); }

myKinect.h

#pragma once #include <Kinect.h> #include <opencv2\opencv.hpp> #include "KinectJointFilter.h" // Safe release for interfaces template<class Interface> inline void SafeRelease(Interface *& pInterfaceToRelease) // 参数为指针的引用 { if (pInterfaceToRelease != NULL) { pInterfaceToRelease->Release(); pInterfaceToRelease = NULL; } } class CBodyBasics { // kinect 2.0 的深度图像分辨率是424 * 512 static const int cDepthWidth = 512; static const int cDepthHeight = 424; public: CBodyBasics(); // Constructor ~CBodyBasics(); // Destructor void Update();// 获得骨架、背景二值图和深度信息 HRESULT InitializeDefaultSensor();// 用于初始化kinect private: // Current Kinect IKinectSensor* m_pKinectSensor; // kinect源 ICoordinateMapper* m_pCoordinateMapper; // 用于坐标变换 // Body reader IBodyFrameReader* m_pBodyFrameReader; // 用于骨架数据读取 void ProcessBody(IBody* pBody); // 处理指定的骨架,并且在屏幕上绘制出来 void DrawBone(const Joint* pJoints, const DepthSpacePoint* depthSpacePosition, JointType joint0, JointType joint1); // 画骨架函数 void DrawHandState(const DepthSpacePoint depthSpacePosition, HandState handState); // 画手的状态函数 void DrawBody(const Joint* pJoints, const DepthSpacePoint *depthSpacePosition); FilterDoubleExponential filter; // Holt Double Exponential Smoothing Filter IBody* GetActiveBody(IBody** ppBodies); // 获取最近的body FLOAT Angle(const DirectX::XMVECTOR* vec, JointType jointA, JointType jointB, JointType jointC); // Get joint angle ∠ABC in degree cv::Mat skeletonImg; // 显示图像的Mat };

myKinect.cpp

#include "myKinect.h" #include <iostream> using namespace DirectX; /// Initializes the default Kinect sensor HRESULT CBodyBasics::InitializeDefaultSensor() { // 搜索kinect sensor HRESULT hr = GetDefaultKinectSensor(&m_pKinectSensor); if (FAILED(hr)){ std::cerr << "Error : GetDefaultKinectSensor" << std::endl; return -1; } // 打开kinect hr = m_pKinectSensor->Open(); if (FAILED(hr)){ std::cerr << "Error : IKinectSensor::Open()" << std::endl; return -1; } // 从Sensor取得Source IBodyFrameSource* pBodyFrameSource = NULL; // 骨架数据源 hr = m_pKinectSensor->get_BodyFrameSource(&pBodyFrameSource); if (FAILED(hr)){ std::cerr << "Error : IKinectSensor::get_BodyFrameSource()" << std::endl; return -1; } // 从Source打开Reader hr = pBodyFrameSource->OpenReader(&m_pBodyFrameReader); if (FAILED(hr)){ std::cerr << "Error : IBodyFrameSource::OpenReader()" << std::endl; return -1; } SafeRelease(pBodyFrameSource); // coordinatemapper hr = m_pKinectSensor->get_CoordinateMapper(&m_pCoordinateMapper); if (!m_pKinectSensor || FAILED(hr)) { std::cout << "Kinect initialization failed!" << std::endl; return E_FAIL; } // skeletonImg,用于画骨架的MAT skeletonImg.create(cDepthHeight, cDepthWidth, CV_8UC3); skeletonImg.setTo(0); return hr; // indicates success or failure } /// Main processing function void CBodyBasics::Update() { // 每次先清空skeletonImg skeletonImg.setTo(0); // 如果丢失了kinect,则不继续操作 if (!m_pBodyFrameReader) { return; } IBodyFrame* pBodyFrame = NULL; HRESULT hr = m_pBodyFrameReader->AcquireLatestFrame(&pBodyFrame); // 获取骨架信息 if (SUCCEEDED(hr)) { IBody* ppBodies[BODY_COUNT] = { 0 }; // 每一个IBody可以追踪一个人,总共可以追踪六个人 // 把kinect追踪到的人的信息,分别存到每一个IBody中 hr = pBodyFrame->GetAndRefreshBodyData(_countof(ppBodies), ppBodies); IBody *bodyToTrack = NULL; bodyToTrack = GetActiveBody(ppBodies); // 获取最近的body // 处理最近的骨架,并且画出来 if (bodyToTrack) { ProcessBody(bodyToTrack); } for (int i = 0; i < _countof(ppBodies); ++i) { SafeRelease(ppBodies[i]); } } SafeRelease(pBodyFrame); // 必须要释放,否则之后无法获得新的frame数据 } // Finds the closest body from the sensor if any IBody* CBodyBasics::GetActiveBody(IBody** ppBodies) { IBody* bodyToTrack = NULL; float closestDistance = 10000.0f; // Start with a far enough distance BOOLEAN bTracked = false; for (int index = 0; index < BODY_COUNT; ++index) { Joint joint[25]; ppBodies[index]->GetJoints(25, joint); // 获得第一个关节数据(JointType_SpineBase = 0) float newDistance = joint[JointType_SpineBase].Position.Z; if (newDistance != 0 && newDistance <= closestDistance) { closestDistance = newDistance; bodyToTrack = ppBodies[index]; } } return bodyToTrack; } /// Handle new body data void CBodyBasics::ProcessBody(IBody* pBody) { HRESULT hr; BOOLEAN bTracked = false; hr = pBody->get_IsTracked(&bTracked); // Retrieves a boolean value that indicates if the body is tracked if (SUCCEEDED(hr) && bTracked) // 判断是否追踪到骨骼 { Joint joints[JointType_Count]; HandState leftHandState = HandState_Unknown; HandState rightHandState = HandState_Unknown; DepthSpacePoint *depthSpacePosition = new DepthSpacePoint[_countof(joints)]; // 存储深度坐标系中的关节点位置 pBody->get_HandLeftState(&leftHandState); // 获取左右手状态 pBody->get_HandRightState(&rightHandState); hr = pBody->GetJoints(_countof(joints), joints); // 获得25个关节点 if (SUCCEEDED(hr)) { /************************************************************************************************ // Raw Joint for (int j = 0; j < _countof(joints); ++j) { // 将关节点坐标从摄像机坐标系转到深度坐标系以显示 m_pCoordinateMapper->MapCameraPointToDepthSpace(joints[j].Position, &depthSpacePosition[j]); } *************************************************************************************************/ // Filtered Joint filter.Update(joints); const DirectX::XMVECTOR* vec = filter.GetFilteredJoints(); // Retrive Filtered Joints float angle = Angle(vec, JointType_WristRight, JointType_ElbowRight, JointType_ShoulderRight); // Get ElbowRight joint angle char s[10]; sprintf_s(s, "%.0f", angle); std::string strAngleInfo = s; putText(skeletonImg, strAngleInfo, cvPoint(0, 50), CV_FONT_HERSHEY_COMPLEX, 0.5, cvScalar(0, 0, 255)); for (int type = 0; type < JointType_Count; type++) { if (joints[type].TrackingState != TrackingState::TrackingState_NotTracked) { float x = 0.0f, y = 0.0f, z = 0.0f; // Retrieve the x/y/z component of an XMVECTOR Data and storing that component's value in an instance of float referred to by a pointer DirectX::XMVectorGetXPtr(&x, vec[type]); DirectX::XMVectorGetYPtr(&y, vec[type]); DirectX::XMVectorGetZPtr(&z, vec[type]); CameraSpacePoint cameraSpacePoint = { x, y, z }; m_pCoordinateMapper->MapCameraPointToDepthSpace(cameraSpacePoint, &depthSpacePosition[type]); } } DrawBody(joints, depthSpacePosition); DrawHandState(depthSpacePosition[JointType_HandLeft], leftHandState); DrawHandState(depthSpacePosition[JointType_HandRight], rightHandState); } delete[] depthSpacePosition; } cv::imshow("skeletonImg", skeletonImg); cv::waitKey(5); // 延时5ms } FLOAT CBodyBasics::Angle(const DirectX::XMVECTOR* vec, JointType jointA, JointType jointB, JointType jointC) { float angle = 0.0; XMVECTOR vBA = XMVectorSubtract(vec[jointB], vec[jointA]); XMVECTOR vBC = XMVectorSubtract(vec[jointB], vec[jointC]); XMVECTOR vAngle = XMVector3AngleBetweenVectors(vBA, vBC); angle = XMVectorGetX(vAngle) * 180.0 * XM_1DIVPI; // XM_1DIVPI: An optimal representation of 1 / π return angle; } // 画手的状态 void CBodyBasics::DrawHandState(const DepthSpacePoint depthSpacePosition, HandState handState) { const int radius = 20; const cv::Vec3b blue = cv::Vec3b(128, 0, 0), green = cv::Vec3b(0, 128, 0), red = cv::Vec3b(0, 0, 128); // 给不同的手势分配不同颜色 CvScalar color; switch (handState){ case HandState_Open: color = green; break; case HandState_Closed: color = red; break; case HandState_Lasso: color = blue; break; default: // 如果没有确定的手势,就不要画 return; } circle(skeletonImg, cvPoint(depthSpacePosition.X, depthSpacePosition.Y), radius, color, 4); } /// Draws one bone of a body (joint to joint) void CBodyBasics::DrawBone(const Joint* pJoints, const DepthSpacePoint* depthSpacePosition, JointType joint0, JointType joint1) { TrackingState joint0State = pJoints[joint0].TrackingState; TrackingState joint1State = pJoints[joint1].TrackingState; // If we can't find either of these joints, exit if ((joint0State == TrackingState_NotTracked) || (joint1State == TrackingState_NotTracked)) { return; } // Don't draw if both points are inferred if ((joint0State == TrackingState_Inferred) && (joint1State == TrackingState_Inferred)) { return; } CvPoint p1 = cvPoint(depthSpacePosition[joint0].X, depthSpacePosition[joint0].Y), p2 = cvPoint(depthSpacePosition[joint1].X, depthSpacePosition[joint1].Y); // We assume all drawn bones are inferred unless BOTH joints are tracked if ((joint0State == TrackingState_Tracked) && (joint1State == TrackingState_Tracked)) { line(skeletonImg, p1, p2, cvScalar(255, 128, 0), 3); // 线宽为3 } else { line(skeletonImg, p1, p2, cvScalar(100, 100, 100), 1); // 线宽为1 } } /// Draws a body void CBodyBasics::DrawBody(const Joint* pJoints, const DepthSpacePoint *depthSpacePosition) { //---------------------------Torso------------------------------- DrawBone(pJoints, depthSpacePosition, JointType_Head, JointType_Neck); DrawBone(pJoints, depthSpacePosition, JointType_Neck, JointType_SpineShoulder); DrawBone(pJoints, depthSpacePosition, JointType_SpineShoulder, JointType_SpineMid); DrawBone(pJoints, depthSpacePosition, JointType_SpineMid, JointType_SpineBase); DrawBone(pJoints, depthSpacePosition, JointType_SpineShoulder, JointType_ShoulderRight); DrawBone(pJoints, depthSpacePosition, JointType_SpineShoulder, JointType_ShoulderLeft); DrawBone(pJoints, depthSpacePosition, JointType_SpineBase, JointType_HipRight); DrawBone(pJoints, depthSpacePosition, JointType_SpineBase, JointType_HipLeft); // -----------------------Right Arm ------------------------------------ DrawBone(pJoints, depthSpacePosition, JointType_ShoulderRight, JointType_ElbowRight); DrawBone(pJoints, depthSpacePosition, JointType_ElbowRight, JointType_WristRight); DrawBone(pJoints, depthSpacePosition, JointType_WristRight, JointType_HandRight); //DrawBone(pJoints, depthSpacePosition, JointType_HandRight, JointType_HandTipRight); //DrawBone(pJoints, depthSpacePosition, JointType_WristRight, JointType_ThumbRight); //----------------------------------- Left Arm-------------------------- DrawBone(pJoints, depthSpacePosition, JointType_ShoulderLeft, JointType_ElbowLeft); DrawBone(pJoints, depthSpacePosition, JointType_ElbowLeft, JointType_WristLeft); DrawBone(pJoints, depthSpacePosition, JointType_WristLeft, JointType_HandLeft); //DrawBone(pJoints, depthSpacePosition, JointType_HandLeft, JointType_HandTipLeft); //DrawBone(pJoints, depthSpacePosition, JointType_WristLeft, JointType_ThumbLeft); // ----------------------------------Right Leg-------------------------------- DrawBone(pJoints, depthSpacePosition, JointType_HipRight, JointType_KneeRight); DrawBone(pJoints, depthSpacePosition, JointType_KneeRight, JointType_AnkleRight); DrawBone(pJoints, depthSpacePosition, JointType_AnkleRight, JointType_FootRight); // -----------------------------------Left Leg--------------------------------- DrawBone(pJoints, depthSpacePosition, JointType_HipLeft, JointType_KneeLeft); DrawBone(pJoints, depthSpacePosition, JointType_KneeLeft, JointType_AnkleLeft); DrawBone(pJoints, depthSpacePosition, JointType_AnkleLeft, JointType_FootLeft); // Draw the joints except the last four(HandTipLeft,ThumbLeft,HandTipRight,ThumbRight) for (int i = 0; i < JointType_Count-4; ++i) { if (pJoints[i].TrackingState == TrackingState_Inferred) { circle(skeletonImg, cvPoint(depthSpacePosition[i].X, depthSpacePosition[i].Y), 3, cvScalar(64, 255, 0), -1); } else if (pJoints[i].TrackingState == TrackingState_Tracked) { circle(skeletonImg, cvPoint(depthSpacePosition[i].X, depthSpacePosition[i].Y), 3, cvScalar(255, 255, 0), -1); } } } /// Constructor CBodyBasics::CBodyBasics() : m_pKinectSensor(NULL), m_pCoordinateMapper(NULL), m_pBodyFrameReader(NULL) { // Some smoothing with little latency (defaults). // Only filters out small jitters. // Good for gesture recognition in games. //defaultParams = { 0.5f, 0.5f, 0.5f, 0.05f, 0.04f }; // Smoothed with some latency. // Filters out medium jitters. // Good for a menu system that needs to be smooth but doesn't need the reduced latency as much as gesture recognition does. //somewhatLatentParams = { 0.5f, 0.1f, 0.5f, 0.1f, 0.1f }; // Very smooth, but with a lot of latency. // Filters out large jitters. // Good for situations where smooth data is absolutely required and latency is not an issue. //verySmoothParams = { 0.7f, 0.3f, 1.0f, 1.0f, 1.0f }; // Setting Smoothing Parameter float smoothing = 0.5f; // [0..1], lower values closer to raw data, passing 0 causes the raw data to be returned float correction = 0.1f; // [0..1], a lower value corrects more slowly and appears smoother float prediction = 0.5f; // [0..n], the number of frames to predict into the future(A value greater than 0.5 will likely lead to overshoot when the data changes quickly) float jitterRadius = 0.1f; // The radius in meters for jitter reduction float maxDeviationRadius = 0.1f; // The maximum radius in meters that filtered positions are allowed to deviate from raw data filter.Init(smoothing, correction, prediction, jitterRadius, maxDeviationRadius); } /// Destructor CBodyBasics::~CBodyBasics() { SafeRelease(m_pBodyFrameReader); SafeRelease(m_pCoordinateMapper); if (m_pKinectSensor) { m_pKinectSensor->Close(); } SafeRelease(m_pKinectSensor); }

main.cpp

#include "myKinect.h" #include <iostream> using namespace std; int main() { CBodyBasics myKinect; HRESULT hr = myKinect.InitializeDefaultSensor(); if (SUCCEEDED(hr)){ while (1){ myKinect.Update(); } } else{ cout << "kinect initialization failed!" << endl; system("pause"); } return 0; }

参考:

Kinect 2.0 + OpenCV 显示深度数据、骨架信息、手势状态和人物二值图

Kinect for Windows SDK v2 Sample Program

Skeletal Joint Smoothing White Paper